I have been working with Rust for a few years now, primarily during my retirement. I’ve authored a few personal tools with it, and I’ve come to prefer working with it rather than the latest C++ compilers from GCC or Clang/LLVM. I find it expressive in ways that C++ isn’t. But Rust, like C++, isn’t perfect and it does have its quirks.

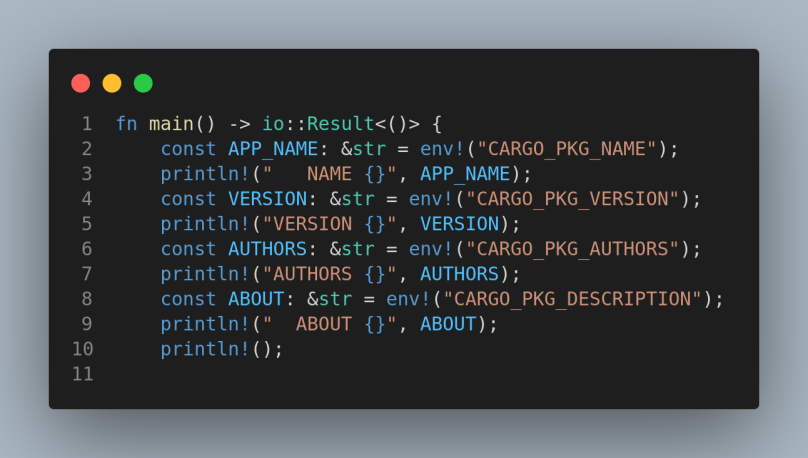

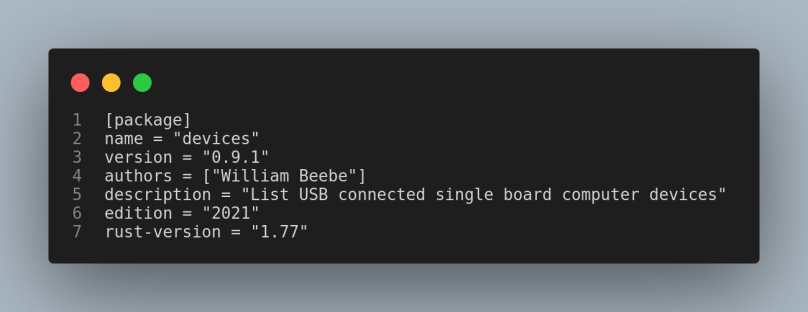

One of the first quirks I encountered was the lack of an obvious way to access the fields in Cargo.toml from within the Rust application itself. As I moved deeper into Rust I turned to a number of books on the language. One of those books taught Rust by rewriting certain Unix/Linux command line tools in it. One topic it covered was command line argument processing, such as passing a version flag to a utility to check its version. I quickly noticed that the example Rust code duplicated information that was already defined in Cargo.toml, such as the application’s name, version, author(s), and description. Decades of software experience have taught me that the last thing you want to do is duplicate data between different files. Instead you want to define data in one location, in one file, and then reference it everywhere in the application and system where you need it. After a bit of searching I found one way to reference that Cargo.toml information; see listing 1 above, which references the first four definitions in listing 2’s [package] section.

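There’s probably a better way to reference Cargo.toml information, but I used Rust’s built-in environment macros because I learned that when Cargo compiles a crate, it exposes the [package] fields to the compiler as environment variables (CARGO_PKG_NAME, CARGO_PKG_VERSION, and so on). The env! macro reads those variables at compile time and embeds their values in the binary as string literals, so nothing has to be looked up in the environment when the application runs. For me this is good enough.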
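For readers who can't make out the screenshots, here is a minimal text sketch of the same idea. It is not a copy of listing 1: the `PackageInfo` struct and the `package_info` and `version_banner` helpers are hypothetical names I made up for illustration. It also uses `option_env!` instead of `env!` so that it still compiles if someone builds the file with plain rustc outside of Cargo; `env!` is the stricter form that fails the build when the variable is missing.

```rust
/// Hypothetical container for the [package] fields Cargo exposes at compile time.
struct PackageInfo {
    name: &'static str,
    version: &'static str,
    authors: &'static str,
    description: &'static str,
}

/// Reads the CARGO_PKG_* variables that Cargo sets while compiling the crate.
/// The "unknown" fallbacks are an assumption for builds done without Cargo.
fn package_info() -> PackageInfo {
    PackageInfo {
        name: option_env!("CARGO_PKG_NAME").unwrap_or("unknown"),
        version: option_env!("CARGO_PKG_VERSION").unwrap_or("unknown"),
        // Cargo joins multiple authors into one colon-separated string.
        authors: option_env!("CARGO_PKG_AUTHORS").unwrap_or("unknown"),
        description: option_env!("CARGO_PKG_DESCRIPTION").unwrap_or("unknown"),
    }
}

/// Hypothetical helper: builds the text printed for a version flag.
fn version_banner(info: &PackageInfo) -> String {
    format!(
        "{} {}\n{}\nAuthors: {}",
        info.name, info.version, info.description, info.authors
    )
}

fn main() {
    let info = package_info();
    // Bare-bones flag check; a real tool would likely use an argument parser.
    if std::env::args().any(|arg| arg == "--version" || arg == "-V") {
        println!("{}", version_banner(&info));
    }
}
```

With this in place, bumping the version in Cargo.toml is the only change needed; the next build picks up the new value automatically.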

By the way, this post marks my first use of the Visual Studio Code plugin CodeSnap to create a visual snapshot of code. I’ve grown tired of trying to use WordPress’ built-in code support. The reference page for how to use it has disappeared, and even when I have used it, I can’t get decent code syntax highlighting. So this is probably what I’ll use from now on.

Links

Rust Environmental Variables — https://doc.rust-lang.org/cargo/reference/environment-variables.html
